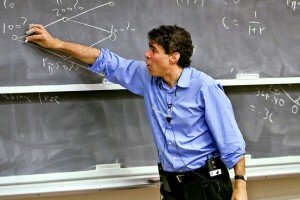

Murray Gell-Mann

Murray Gell-Mann is one of the world’s standout intellectual legends. He is known for developing key aspects of the modern theory of quantum physics, but he is renowned in many other fields as well. Admitted to Yale at 15, he earned his PhD from MIT at 21, and in 1969 he received the Nobel Prize in Physics (unshared). He has also become an international adviser on the environment, wrote the book “The Quark and the Jaguar”, at last count speaks 13 languages (not all fluently), and has expertise in fields as varied as natural history, historical linguistics, archaeology, bird-watching, and depth psychology. I’m appending excerpts from his background at the end of this post.

I first met Gell-Mann in the 1980s in Aspen, Colorado, where we both still have homes and are involved with the Aspen Institute. Far ahead of most of us on virtually any subject, he is always interesting to talk to, whether in Aspen, Santa Fe, or New York (where I recently bumped into him at the theatre with his lovely companion, designer Mary McFadden).

In the foreword I wrote for Warren Raisch’s 2001 book, “The eMarketplace: Strategies for Success in B2B eCommerce”, I covered several topics that Gell-Mann and I had discussed years before in Aspen. Because I considered myself a participant in the information field, I took note when I learned that he was perhaps the first to modify the term “information explosion”, calling it instead the “misinformation explosion” and observing, before such trends were broadly recognized, that content is too often inaccurate, poorly organized, or even irrelevant!

In fact, Gell-Mann was reacting to our later discussion of ‘infoglut’ by stressing what too few had recognized: that content is often poorly organized or even irrelevant, and that there is an urgent need for “intermediation” in the knowledge field. Importantly, he called for a reward system for the “processors, not originators” of information, the people who analyze and interpret findings and act as intermediaries, all with the aim of improving research. In physics, Gell-Mann had long ago acknowledged the recognition awaiting scientists who achieve early, positive experimental results, even if those results are later proven wrong; but what about the importance of continuously clarifying, synthesizing, evaluating, and so forth? Little if any reward was generally forthcoming for these essential activities, and I had to agree that, as far as I knew, such middlemen were a rarity in the Advisory industry.

I found this reward-system concept fascinating. Even if such a ‘middleman’ job description eventually shows up in Advisory, however, I later learned that Murray’s definition of intermediators differed from mine. He was speaking of middlemen on the top rung of sophistication, who review various hypotheses and determine where true value lies; I was speaking of synthesizers trained in a particular segment of an industry or government sector, who could separate wheat from chaff when reviewing publicly disseminated content or when intelligently moderating a discussion among several experts. In my judgment, the difference between us was one of seniority: Murray’s intermediators sat at the top level, and mine somewhere in the middle.

The similarities seemed to me more critical than the differences. My background was nowhere close to that of physicists in sophistication, but it did include Wall Street, where many analysts have assistants who process company financial reports, build forecasting spreadsheets, and much more. The clear analogy between the Advisory and Wall Street research models should lead to experimentation in the Advisory setting. Perhaps some combination of analysts, experts, content curators, salespeople with technical training, techies with sales training, and so on could help smaller Advisory firms create a differentiated and advantageous image (and reality). As the number of industry ‘niches’ continues to grow, large volumes of information must be broken down into subsets, and new methodologies can be developed that engage a new breed of assistants to carry out these new functions. Most senior analysts should welcome this, since there is no reason they should not question their assistants’ findings, and even their tentative conclusions, provided it is done constructively and respectfully.

The term I’ve used, “intelligent aggregation” (which I believe preceded “curation” in our information field), covers job descriptions in which assistants help at least to separate good external sources and findings from bad ones, compute summary data, refine projections, and research alternative sources for data collection or mining. It would be especially valuable if new processes could help interpret and evaluate external points of view, which might add value even when they contradict our own conclusions.

While analysts are always encouraged to produce new ideas beyond responding to client questions and issues, the standard practice that assistants pursue should also include seeking out, evaluating, and essentially certifying other content. For example, intermediators who make a point of triangulating several different points of view and then converging on a reasoned conclusion are adding important value; of course, their conclusions can always be overridden by superiors.

The triangulating and converging practice above addresses our ‘infoglut equals misinformation’ problem, which leads to another Gell-Mann descriptor: “information trauma”, suggesting that confusion often results from analysis. A similar concept may have been one underlying reason why, as soon as Gartner was founded, we reduced the previously verbose analyst essays to two-sided notes, with GiGa later reducing the length of value-added research considerably further. After all, even as we reduced the volume of content delivered to clients, any rate of compound growth in information and data produces staggering volumes, especially relative to our limited and fixed capacity to absorb even a small fraction of it! Ironically, we also know that the information glut has become profitable, as channels such as specialized media, think tanks, information services, and of course websites and web traffic continue to proliferate, creating enormous redundancy, not to mention conflict. Unfortunately, the misinformation we’re familiar with remains rampant and may be exploding, resulting in what we and others have called “information anxiety”.

I believe that Gell-Mann’s bottom line was correct: knowledge intermediation processes, including the intermediators themselves, are absolutely necessary and may hold one key to future breakthroughs in knowledge management and enhancement.

A few highlights of Gell-Mann’s history and contributions to physics, from Wikipedia:

- Gell-Mann earned a bachelor’s degree in physics from Yale University in 1948 and a PhD in physics from MIT in 1951. He was a postdoctoral research associate in 1951, and a visiting research professor at the University of Illinois at Urbana-Champaign from 1952 to 1953. After serving as Visiting Associate Professor at Columbia University in 1954-55, he became a professor at the University of Chicago before moving to Caltech, where he taught from 1955 until 1993. He was awarded the Nobel Prize in Physics in 1969 for his discovery of a system for classifying subatomic particles.

- He is currently the Robert Andrews Millikan Professor of Theoretical Physics Emeritus at Caltech as well as a University Professor in the Physics and Astronomy Department of the University of New Mexico in Albuquerque, New Mexico. He is a member of the editorial board of the Encyclopædia Britannica. In 1984 Gell-Mann co-founded the Santa Fe Institute — a non-profit research institute in Santa Fe, New Mexico — to study complex systems and disseminate the notion of a separate interdisciplinary study of complexity theory. There he met and befriended Pulitzer Prize-winning novelist Cormac McCarthy.

- With regard to his research [none of which I understand], besides discovering and explaining the quark, Murray Gell-Mann formulated the quark model of hadronic resonances, identified the SU(3) flavor symmetry of the light quarks, and extended isospin to include strangeness. He discovered the V-A theory of chiral neutrinos in collaboration with Richard Feynman, and in the 1960s created ‘current algebra’ as a way of extracting predictions from quark models while the fundamental theory was still murky, an approach that led to model-independent sum rules confirmed by experiment. Whew!

- Along with Maurice Levy, Gell-Mann discovered the sigma model of pions, which describes low-energy pion interactions, and he joined others in writing down the modern, accepted theory of quantum chromodynamics. Gell-Mann is responsible for the see-saw theory of neutrino masses, which produces masses at the inverse-GUT scale in any theory with a right-handed neutrino, such as the SO(10) model.

- He is also known to have played a large role in keeping string theory alive through the 1970s, supporting that line of research at a time when it was unpopular.

Read Part I – Information vs Knowledge

Read Part II – Gartner & Forrester and Other Competition
